The History of Computers

The history of computers is a fascinating journey that spans thousands of years and includes many groundbreaking inventions and innovations. Computers began as simple counting tools and became sophisticated electronic devices that are now indispensable in the modern world. This article explores the key milestones in that history, from ancient times to the digital age.

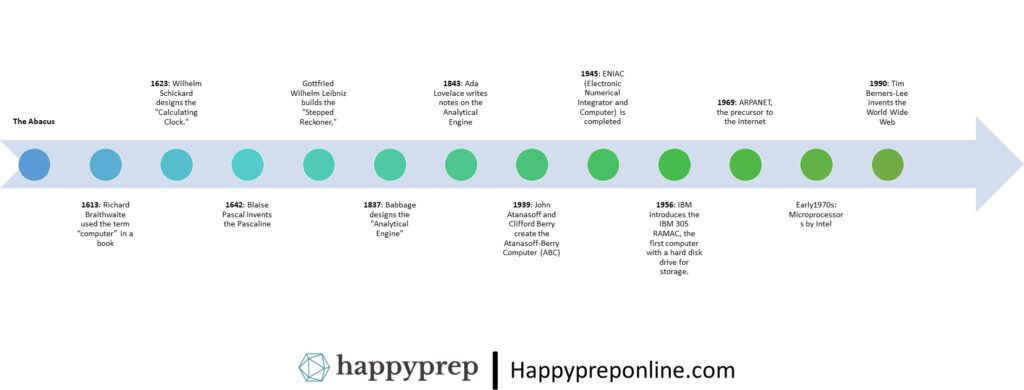

The major stages in the development of computers are described below:

1. The Abacus

The abacus is one of the earliest known computing devices and dates back thousands of years. It was used by many civilizations, including the Greeks, Romans, and Chinese, to perform basic arithmetic. A typical abacus consists of a frame holding rods strung with beads, which the user moves to represent numbers.

2. Mechanical Calculators

Mechanical calculators emerged in the 17th century, automating arithmetic that had previously been done by hand or with tools such as the abacus. Notable examples include:

- Blaise Pascal’s Pascaline (1642): A mechanical calculator that could perform addition and subtraction using gears and wheels.

- Gottfried Wilhelm Leibniz’s Stepped Reckoner (1694): An improved calculator that could also perform multiplication and division, using a mechanism now known as the stepped drum or Leibniz wheel.

These early calculators laid the groundwork for more advanced mechanical computing devices.

1. Charles Babbage and the Analytical Engine

Charles Babbage, an English mathematician, is often considered the “father of the computer.” In 1837 he first described the Analytical Engine, a general-purpose mechanical computer designed to be programmed with punched cards. Its design incorporated key concepts of modern computing, including a separate memory (the “store”) and processing unit (the “mill”), programmable instructions, loops, and conditional branching. Although Babbage never completed a working machine, his ideas influenced the later development of computers.

2. Ada Lovelace and the First Computer Program

Ada Lovelace, a mathematician who worked with Babbage, is widely credited with writing the first computer program. In her 1843 notes on the Analytical Engine, she described an algorithm for computing Bernoulli numbers and recognized that such a machine could manipulate symbols other than numbers, going beyond pure arithmetic. Lovelace’s work demonstrated the concept of programmable computing and inspired later computer scientists.

Early Electronic Computers

The 20th century saw the development of electronic computers, which used electronic components such as vacuum tubes instead of mechanical parts to perform calculations. These early machines were large and complex, but they represented significant advances in technology. Notable early electronic computers include:

- Atanasoff-Berry Computer (ABC) (1939-1942): Often regarded as the first electronic digital computer, built by John Atanasoff and Clifford Berry at Iowa State College to solve systems of linear equations. It used binary representation and vacuum-tube circuits, but it was not programmable.

- Colossus (1943-1944): A British computer built at Bletchley Park during World War II to help break the German Lorenz cipher. It is widely regarded as the world’s first programmable electronic digital computer.

- ENIAC (Electronic Numerical Integrator and Computer) (1946): An American computer built for the U.S. Army to calculate artillery firing tables. It was one of the first general-purpose electronic computers and used nearly 18,000 vacuum tubes.

The Invention of the Transistor

The invention of the transistor in 1947 by John Bardeen, Walter Brattain, and William Shockley at Bell Labs revolutionized electronics. Transistors gradually replaced vacuum tubes in computers, offering greater reliability, smaller size, and lower power consumption. This breakthrough paved the way for the miniaturization of computers.

1. Mainframe Computers

The 1950s and 1960s saw the rise of mainframe computers, large-scale systems designed for high-volume data processing and multi-user support. Mainframes were used by governments, corporations, and research institutions for tasks such as payroll, data analysis, and business operations. Notable mainframes include:

- IBM 701 (1953): IBM’s first commercial computer, designed for scientific applications.

- IBM System/360 (1964): A family of mainframes that shared a single architecture, so software written for one model could run on larger or smaller models. This compatibility allowed customers to upgrade without rewriting their existing software.

2. Minicomputers

Minicomputers, also known as midrange computers, emerged in the 1960s as smaller, more affordable alternatives to mainframes. They were used by medium-sized businesses and research institutions. Notable minicomputers include:

- DEC PDP-1 (1960): One of the first minicomputers, known for its compact size and interactive capabilities.

- DEC PDP-8 (1965): Often cited as the first commercially successful minicomputer, popular in research and education.

1. The Microprocessor Revolution

The invention of the microprocessor in the early 1970s by Intel engineers, including Ted Hoff and Federico Faggin, marked a significant turning point in computer history. The microprocessor, starting with the Intel 4004 in 1971, placed the central processing unit (CPU) on a single chip, making computers much smaller and cheaper. This innovation made the personal computer possible.

2. Early Personal Computers

The late 1970s and early 1980s saw the emergence of personal computers (PCs), designed for individual use. Key developments in this era include:

- Apple I (1976) and Apple II (1977): Designed by Steve Wozniak and marketed with Steve Jobs. The Apple II became one of the first commercially successful mass-produced personal computers.

- Commodore PET (1977): A popular all-in-one personal computer with a built-in keyboard, monitor, and cassette drive.

- IBM PC (1981): IBM’s entry into the personal computer market. Its architecture became the de facto standard for PCs and was widely copied by “IBM-compatible” machines.

3. The Rise of Graphical User Interfaces (GUIs)

Graphical user interfaces (GUIs), pioneered at Xerox PARC in the 1970s, reached mainstream personal computers in the 1980s and revolutionized personal computing. GUIs allowed users to interact with computers through graphical elements like icons, windows, and menus. Notable milestones include:

- Apple Macintosh (1984): The first commercially successful personal computer with a GUI, designed to be user-friendly.

- Microsoft Windows (1985): Microsoft’s graphical environment for PCs, which initially ran on top of MS-DOS. Later versions became the dominant PC operating system.

1. The Internet and Networking

The development of the Internet in the late 20th century transformed the computing landscape. The Internet’s origins can be traced back to ARPANET, a U.S. Department of Defense project that first linked research computers in 1969. By the 1990s, helped by the arrival of the World Wide Web, the Internet had become a global phenomenon that transformed communication, information sharing, and business.

2. Mobile Computing and the Cloud

The 21st century has seen the rise of mobile computing and cloud technology. Mobile devices like smartphones and tablets have become ubiquitous, providing portable computing power. Cloud computing allows users to access data and applications remotely, reducing the need for local storage and computing resources.

3. Artificial Intelligence and Quantum Computing

Recent advances in artificial intelligence (AI) and quantum computing are pushing the boundaries of computing technology. AI applications, such as machine learning and natural language processing, are transforming industries. Quantum computing uses qubits, which can exist in superpositions of states, and promises to solve certain problems far faster than classical computers, although practical large-scale machines are still under development.